Deepfake technology uses artificial intelligence to create realistic but fabricated media. It has advanced rapidly in recent years. Techniques such as Generative Adversarial Networks (GANs) are used to manipulate images, videos, and audio, producing content that can be difficult or even impossible to distinguish from genuine recordings. While deepfakes have legitimate applications in entertainment and education, they also pose substantial ethical and societal challenges.

The entertainment industry has embraced deepfake technology for purposes such as de-aging actors or recreating historical figures. For instance, the film *Here*, starring Tom Hanks and Robin Wright, used AI-based de-aging to make the actors appear younger. This application has sparked debate among industry professionals. Actress Lisa Kudrow criticized the film as an endorsement of AI. She expressed concern about what it means for human actors and warned that it could reduce opportunities for newcomers to the industry.

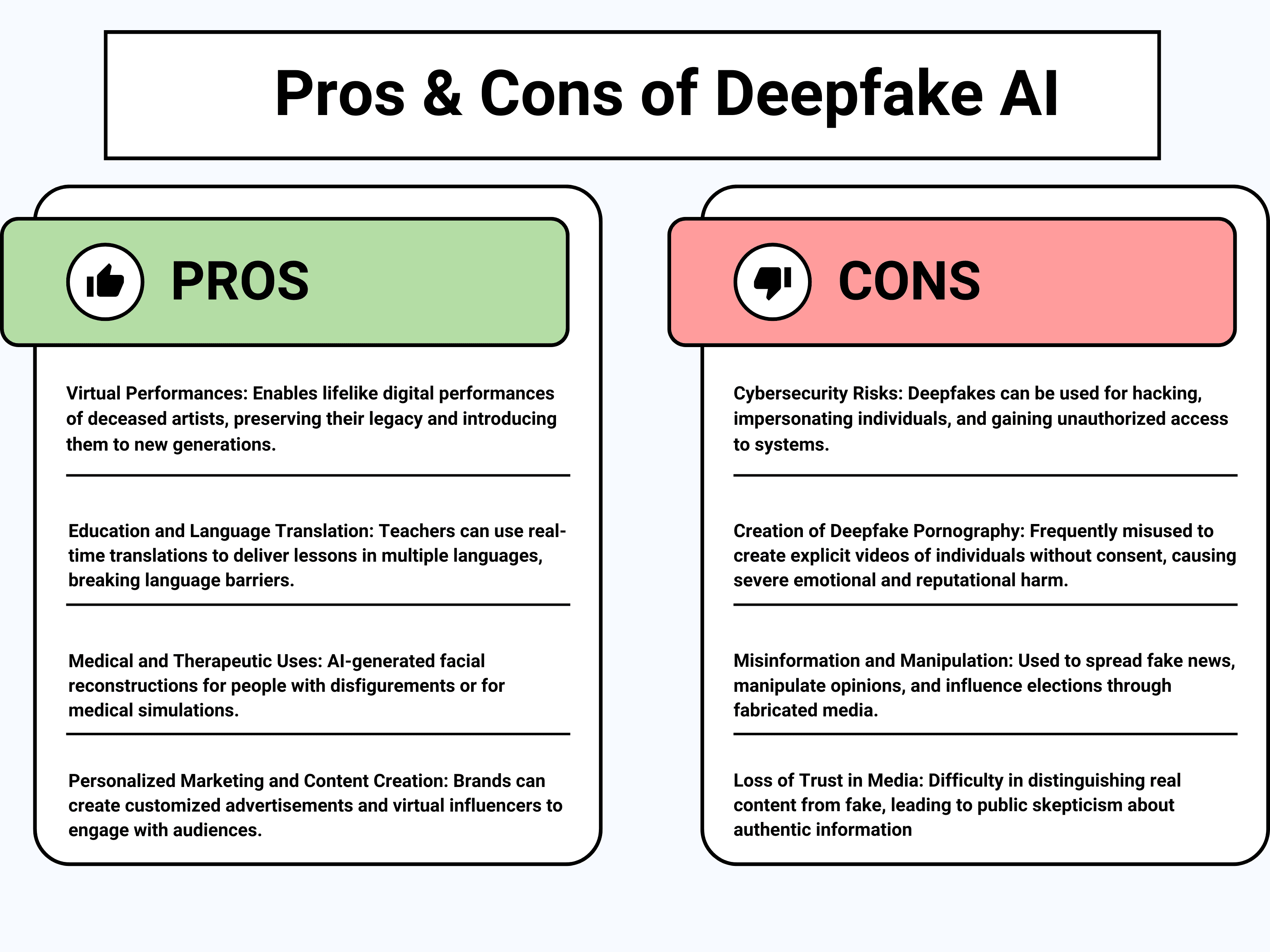

Beyond entertainment, deepfakes have been weaponized for malicious purposes, including the creation of non-consensual explicit content and the spread of misinformation. Related generative techniques power sites such as thispersondoesnotexist.com, where every refresh produces a lifelike image of a person who doesn’t exist. Although fascinating, the same capability carries risks. Fabricated identities and media can be used to manipulate people on social media, to support social-engineering attacks, and even to sway elections by spreading false narratives. These uses also raise pressing questions about privacy and ownership; for example, once a deepfake video has been generated, who owns it?

The financial sector is increasingly vulnerable to deepfake threats. Cybercriminals use deepfake technology to impersonate bank officials or customers, enabling fraudulent transactions and identity theft. For example, deepfake audio that mimics a CEO’s voice can be used to instruct employees to carry out large, unauthorized fund transfers. The Financial Crimes Enforcement Network (FinCEN) has warned that deepfakes are playing a growing role in fraud targeting banks and credit unions.

Ethical dilemmas surrounding deepfakes extend to sensitive contexts such as recreating deceased individuals. Anyone making a deepfake of a person who has died should first obtain explicit permission from that person’s closest relatives or loved ones. Without proper consent, such uses can cause significant emotional distress and lead to legal repercussions. Moreover, as deepfakes grow more sophisticated, there is a real concern that distinguishing real from fake content will become nearly impossible, eroding public trust in digital media.

Addressing these challenges requires a multifaceted approach. Advances in detection are crucial, and AI-based tools and algorithms are being developed to identify malicious deepfakes and limit their spread. Examples of tools for detecting or authenticating media include Deepware Scanner, Sensity AI, Microsoft Video Authenticator, and Amber Authenticate.

Legislation must also evolve to address these complexities. The European Parliament, for example, has moved to strengthen the detection and prevention of deepfakes in response to the growing threats posed by sophisticated AI. Irish MEP Maria Walsh emphasized the severe consequences of deepfake content and called for stronger legal measures, including stringent penalties for those who create and distribute it. Beyond specific laws, broader discussions about ethics, regulation, and policy should lead to clear rules for managing misuse effectively.

In conclusion, deepfake technology offers innovative possibilities, but it also presents significant ethical and societal challenges. History suggests that with many disruptive technologies, initial fears eventually give way to understanding and practical use. Many people fear that deepfakes will do lasting harm. However, as regulations evolve and awareness grows, society will likely find ways to benefit from this technology responsibly. Doing so requires balancing those benefits against the need to protect individuals. It also depends on ongoing efforts in detection, regulation, and public awareness. The future promises to be both weird and wonderful, with deepfake technology serving as a double-edged sword that must be wielded with care.